-

TIL:AI. Thoughts on AI

I use AI a lot for work, pretty much all day every day. I use coding assistants and custom agents I’ve built. I use AI to help code review changes, dig into bugs, and keep track of my projects. I’ve found lots of things it’s very helpful with, and lots of things it’s terrible at. If there’s one thing I have definitely learned: it does not work the way I imagined. And the more folks I talk with about it, the more I find it doesn’t work like they imagine, either.

This is a collection of various things I’ve learned about AI in the time I’ve spent working with it. It’s not exhaustive, and I expect to keep updating it from time to time as I learn more things and as things change.

An AI is not a computer. But an AI can use a computer. That is probably the most important lesson I’ve learned about these systems. A huge number of misconceptions about what AI is good for come from the assumption that it is a computer with a natural language interface. It absolutely is not that. It is a terrible computer in very much the same way that you are a terrible computer. It is pretty good at math, but it is not perfect at math in the way that a computer is. It is pretty good at remembering things, but it does not have perfect memory like a computer does.

AIs have a limited block of “context” that they can operate on. These range from a few tens of thousands of tokens up to around a million tokens. A token is a bit less than an English word worth of information, and a typical novel of a couple of hundred pages is on the order of 100,000 tokens. Even a moderately-sized project can be in the millions of tokens.And not all of the context is available for your task. Substantial parts of the context window may be devoted to one or more system prompts that instruct the model how to behave before your prompt even is looked at.

If you tell an AI “rename PersonRecord to Person everywhere in my codebase,” it sounds really straightforward. But an obvious way for the AI to do this includes reading all the files. That can overflow its context, and it may forget what it was working on. Even if it’s successful, it will be very slow. It’s very similar to asking an intern to print out all the files in the repository and go through each of them looking for PersonRecord and then retyping any file that needs changes. AI reads and writes very quickly, but not that quickly. They are not computers.

The better approach is to tell the AI “write a script to rename PersonRecord to Person, and then run that script.” This they can do very well, just like the intern. (I’m going to talk about interns a bit here.) Now it only needs to keep track of a small script, not every word of the entire repository. Scripts are fast and consistent. AIs are not. If you want an AI to use a computer, you often need to tell it to do so.

If you use a coding assistant, it may have a system prompt that tells the model about tools so you don’t have to. In Cline the majority of the 50KB prompt is devoted to explaining the available tools and when and how each should be used. Even though all the major models include extensive information about tools that exist, it is not obvious to AIs that they can or should use them. They have to be told. And in my experience, they often forget in the middle of a job. “Remember to use your tools” is a pretty common prompt.

Context windows are not like RAM. A common question is “can’t you just make the context window larger?” Basically, no. The size of the context window is set when the model is created. Essentially the model has a certain total size, and its size has a lot of impacts, both in cost to train and run, and even whether it works well or not. Making models bigger doesn’t always make them work better.

A part of that total size is the context window, the space where all the input text lives while the AI is working. This window isn’t some untrained chunk that can be expanded on the fly; it’s fully integrated into the model architecture, baked in during training. You can’t just bolt on more capacity like adding RAM or a bigger hard drive. And it’s a bit like human memory. Sometimes the AI forgets things or gets distracted, especially when you pack in too much unrelated stuff. Ideally, the AI should have just what it needs for the task, no more.

Context windows also include everything in kind of a big pile. When you upload a document to ChatGPT and type “proofread this,” there’s no deep separation between the document and the instruction. Even keeping things in order doesn’t come for free. It can be difficult for an AI to distinguish between which parts of the context it’s supposed to follow and which parts it’s supposed to just read. This allows prompt injection attacks, but even in more controlled settings, it can lead to unexpected behaviors.

Unlike SQL Injection, there’s no clear solution to this problem. You can add more structure to your prompts to make things clearer, but it’s a deep problem of how LLMs are designed today. Today the answer is mostly “guardrails,” which is basically “its secure, there’s a firewall” for AI. As a former telecom engineer and security assessor, this is the thing that makes me most ask “have we learned nothing?”

AIs do not learn. It is easy to imagine that a model like Claude is constantly adapting and learning from its many daily interactions, but this isn’t how AIs generally work. The model was frozen at the point it was created. That, plus its context, is all it has to work with. New information does not change the model day to day. And every time you start a new task, all of the previous context is generally lost. When you say “don’t do X anymore” and the AI responds “I’ll remember not to X in the future,” it’s critical to understand it has no built-in way to remember that.

In most systems, the only way for the AI to remember something is for it be written down somewhere and then read back into its context later. Think Leonard from Memento, and you’ll have the right idea. This leads to a bunch of memory tools that work in a variety of ways. It might be a human, writing new things into the system prompt. It might be a more persistent “session,” but most often it’s some external data store that the model can read, write, and search. It might be an advanced database, or they might just be a bunch of markdown files. But the key point is it’s outside the model. The model generally doesn’t change without a pretty big investment by the whoever maintains the model.

Even with the rise of memory systems, most interactions today have very limited memory. Systems like the Cline Memory Bank can work much better in theory than practice. It’s challenging to get AIs to update their memory without nagging them about it (kind of like getting people to write status reports). More advanced systems that provide backend databases don’t just drop in and work. You need to develop the agent to use them effectively. Even the most basic memory systems (long running sessions) require context management to keep things working smoothly. You should generally assume that tomorrow your AI will not remember today’s conversation well if at all.

AIs are like infinite interns. Rather than thinking of AIs as natural language interfaces to super-intelligent computers, which they are not, it can be helpful to think of them as an infinite pool of amazingly bright interns who all work remotely and you can assign any task for a week.

You can ask them to read things, write things in response to what they’ve read, write tools, run tools, do just about any remote-work task you like. But next week, this batch will be gone, and you’ll get a new batch of interns.

How should you manage them? You can ask them to read all your source code, but next week they’ll need to read it all again. You can ask them to read your source code and write explanations. That’s better. Then the next group can read the explanations rather than starting from scratch. But what if they misunderstand and write the wrong explanation? Then you’ve poisoned all your interns. They’ll all be confused. You need to read what they write and correct it. Better, maybe you should write the explanations in the first place. If you don’t know the system well enough to explain it to the interns, then you’re going to be in trouble. You’d better learn more. Maybe a bunch of interns could help you research?

You can assign them tasks, but remember, they’re interns. They’re really smart, but they’ve never really worked before. They know stuff, but not the stuff you need them to know. And they do not learn very well. How do you make them useful? You need to be pretty precise about what you want. They’re distractible. They don’t know how to coordinate their efforts, so even though you have infinite interns, there are only so many you can use together. It’s up to you to help them structure their work. Maybe you can train one of them to be in charge and organize (“orchestrate”) the others. Maybe you need someone to orchestrate the orchestrators. It’s starting to feel like system design.

Wait a minute. Wasn’t AI supposed to do all of this for me? Oh, sweet summer child. You thought that AI would mean less work? No. AI means leverage. You can get more out of your work, but you’ll work harder for it. You can get them to write you code, but you’ll spend that time writing more precise design specs. You can get them to write design specs, but you had better have your requirements nailed down perfectly. Leverage means you have to keep control. AI will take you very far, very fast. Make sure you’re pointed in exactly the right direction.

Reviewing AI code requires special care. When reviewing human-written code, we often look for certain markers that raise our trust in it. Is it thoroughly documented? Does it seem to be aware of common corner cases. Are there ample tests?

But AI is really good at writing extensive docs and tests. It takes a bit of study to realize that the docs are just restating the method signatures in more words, and the tests are so over-mocked that they don’t really test anything. And everything is so professional and exhaustive, it puts you in a mind that “whoever wrote this must have known what they’re doing.” And that has definitely bitten me. You can say “always be careful,” but when reviewing thousands of lines of code, you have to make choices about what you focus on.

It’s especially important because AI makes very different kinds of mistakes than humans make, and makes radically different kinds of mistakes than what you would expect given how meticulous the code looks. So knowing you’re looking at AI-generated code is an important part of reviewing it properly. AI is much more likely to do outrageous “return 4 here even though it’s wrong to make the tests pass” (and comment that they’re doing it!) than any human.

Conversely, AI is pretty good at reviewing code. I actually like it better as a code reviewer than a code writer, and I currently have it code review in parallel everything I review. It’s completely wrong about 30% of the time. And 50% of the time it doesn’t find anything I didn’t find. But about 20% of the time it finds things I missed, and that’s valuable. Just be careful about making it part of your automated process. That 30% “totally wrong and sometimes completely backwards” will lead junior developers astray. You need to be capable of judging its output.

I do find that AI writes small functions very well, and I use it for that a lot. I often build up algorithms piecemeal and by the time it’s done, it’s kind of a mess and I want to refactor it down into something simpler. One of my most common prompts is “simplify this code: …pasted function…” More than once in the process, it’s found corner cases I missed. And when it turns my 30-line function into 5 lines, it’s generally very easy to review.

AI is emergent behavior. Almost everything interesting about AI is due to behaviors no one programmed, and (at least today) no one understands. LLMs can do arithmetic, but they don’t do it perfectly, which surprises people. But what’s surprising is that they can do it at all. We didn’t “program” the models to do arithmetic. They just started doing it when they got to a certain size. When they “hallucinate,” it’s not because there’s a subroutine called

make_stuff_up()that we could disable. All of these things are emergent behaviors that we don’t directly control, and neither does the AI.We try, through prompting, to adjust the behaviors to align with what we want, but it’s not like programming a computer. “Prompt engineering” is mostly hunches today. Giving more precise prompts seems to help, but even the most detailed and exacting prompt may not ensure an AI does what you expect. See “infinite interns.” Or as Douglas Adams says, “a common mistake that people make when trying to design something completely foolproof is to underestimate the ingenuity of complete fools.”

AI does not understand itself. LLMs have no particular mechanism to self-inspect. It mostly knows how it works through its training set and sometimes through prompting. Humans also do not innately know much about the brain or how it works, but may have learned about it in school. Humans have no particular tool for inspecting what their brains are doing. An AI is in the same boat. When you ask an AI “why” it did something, it’s similar to asking a human. You may get a plausible story, but it may or may not be accurate. Sometimes there’s a clear line of reasoning, but sometimes there isn’t.

Similarly, asking an AI to improve its own prompts is a mixed bag. The only thing it really has to work with is suggestions that were part of its training or prompt. So at best they know what people told them would help, which mostly boils down to be “be structured and be precise,” which we hope will help, but doesn’t always.

AI is nondeterministic. Just because a prompt worked once does not mean it will work the same way a second time. Even with the same prompt, context and model, an LLM will generally produce different results. This is intentional. How random the results will be is a tunable property called temperature, so for activities that should have consistent behaviors, it can be helpful to reduce the temperature. Reducing the temperature prevents the model from straying as far from its training data, so setting it too low can make it unable to adapt to novel inputs.

But ultimately, if you need reliable, reproducible, testable behavior, AI alone is the wrong tool. You may be better off having an AI help you write a script, or to create a hybrid system where deterministic parts of the solution are handled by traditional automation, with an AI interpreting the results.

AI is changing quickly. I’ve tried to keep this series focused on things I think will be true for the foreseeable future. I’ve avoided talking about specific issues with specific tools, because the tools are changing at an incredible pace. That doesn’t mean they’re always getting better. Sometimes, they’re just different, and the trade-offs aren’t always clear.

But overall, things are getting better, and things that weren’t possible just a few months ago are now common practice. Agentic systems have been revolutionary, and I fully expect multi-agent systems to radically expand what’s possible. One-bit models may finally make it practical to run large models locally, which would also completely change the use-cases. I expect the landscape to be very different a year from now, and if AI does not solve a problem today, you should re-evaluate in six months. But I also expect a lot of things that I’ve said here to stay more or less the same.

AI is not a silver-bullet. It does not, and I expect will not, be an effective drop-in replacement for people. It’s leverage. Today, in my experience, it’s challenging to get easy productivity gains from it because it’s hard to harness all that leverage. I expect that to eventually change as the tools improve and we learn to use them better. But anyone expecting to just “add AI” and make a problem go away today will quickly find they have two problems.

-

My AI distractions, hopefully a lesson, but maybe just a story

I feel there’s definitely a blog post in here. I don’t want to get distracted from my testing series, but my head’s just churning and I need to write stuff, so maybe I’ll just sketch a story until I can turn it into something more coherent.

I’ve been working on this small program to convert close-scored choral music into separate rehearsal tracks. I currently do it by hand in MuseScore, but…come on. I’m a programmer. I build stuff.

But really I wouldn’t build this. It’s too much trouble. There’s so many little headaches dealing with music notation formats and seriously, it would save like 10 minutes at best every so often if I could get it working perfectly, and way less if there are any manuals steps. So, you know, it goes on the shelf?

But then I’m thinking that AI can help me do it quick. And then I’ll have a thing that I literally wouldn’t have built otherwise. And that’s a really great story. I’ve built a lot of small AI things at work. They’ve all been net-negative in terms of productivity, but I can see how we’d get to a worthwhile system. Every technology I’ve ever learned has been net-negative for a while. I’m used to that. Learning curves. And the tooling around AI is still in its infancy.

This just feels like a great use case. I don’t really know the format. I don’t know the frameworks. It’s going to be Python, which I kind of know, but it’s not my strength. And Claude 4 just came out. And I have Cline. And I pull out my wallet to buy some credits and let’s do this thing.

And it starts so well. So well. Like magic. It’s amazing. Cline is designing things to solve my problem and all I need to do is look over some code. I’m not quite “vibe coding,” but I’m not writing anything, and often I just let it go and review it when it finishes a phase. I design a whole project plan for it and it’s chewing through it.

Now Cline has some really annoying problems. And one of them is that it is incredibly inefficient cost-wise, because it constantly rewrites files from scratch rather than editing them. I finally get sick of burning cash and switch to Roo. And it’s like magic riding a unicorn. It is so much better. I am blown away. It is designing things and coding things and debugging things, and it’s amazing and glorious.

And I start to wonder…. should this be glorious? It doesn’t feel like that hard a problem. I mean, it has a bunch of little corner cases, but, still. It’s not that hard. But Roo has found a bug and is running it to ground with test after test after test. It’s tweaking things. It’s getting more tests to pass.

But wait a minute. What is this “more tests to pass?” It’s using passing tests as a measure of success. Moving from 2% passing to 10% passing makes it think it’s on the right path. But I realize it’s getting there by building one work-around after another, trying to make progress. Any time a method doesn’t return what it expects, it adds another fallback, trying approach after approach during every run, with a comment indicating “sometimes the framework doesn’t return the expected value.” It’s not experimenting and then using its experiment to drive the software. It’s putting everything it thinks might work in the function bracketed with

elseandcatchand hoping. At one point it literally tries to writes a functionfix_measure_29()where it just adjusts everything in one specific measure of one specific song so the test will pass. I rejected that change.At this point I’ve been working with it for around 2-2.5 days. I’m about $90 deep into tokens. We got to 80%-ish functionality really fast, but then stalled. The architecture is such a mess that every improvement takes forever and breaks other things. Claude constantly tells me it “works perfectly” when it has exactly the same bug as before. It constantly reaches for private APIs and internal properties. And it’s built so many fallbacks that nothing ever fails with a clear error. It’s built confidence values for each computation, with configurable options to let you override its heuristics. I told it the exact layout of the voice parts (soprano is part 1, voice 1; alto is part 1, voice 2; etc.) Even so, Claude is doing analysis on the note ranges to decide what notes go to what part. That’s a problem, because in this song the Soprano part goes well into the Tenor range. I didn’t ask for any of that.

The program has grown first to about 800 lines and now over 2800 lines. There’s a

ContextualUnifier, aSimpleSlurFixer, aStaffSimplifier, aVoiceAwareSlurFixer, aDeterministicVoiceIdentifier, aVoiceRemover, the list goes on. At first, I was amazed at all of the deep design documentation it was writing. There’s about 6000 words of design specs, bug analysis, project plans. It’s really quite amazing. But the program doesn’t work and I’m burning a lot of money.This morning, I thought I’d try something different. I started reading the docs for the library I’m using. Just…reading them. All of them. And then I kind of thought about the problem for a while. I asked Roo to scaffold me out a Python commandline tool, and write some loading logic. I started directing it to build a really simple app based on what I knew about how the framework is supposed to be used. It overcomplicated things, and I had to tell it to stop, and then just delete the code. But I got something working in a really simple way in maybe half an hour for about $1 worth of tokens. I used ChatGPT ($20/month Plus plan) to write a couple of small functions where I couldn’t remember the syntax in Python.

Eventually I just started coding things by hand. I probably spent 2-3 hours on it today. It’s 162 lines of Python so far, which feels like the right kind of size. It has a few things more I need to fix, but it mostly works, and I mostly understand the bits that don’t.

And I have no idea what lesson you’re supposed to take away from this little story. I know many will say “duh, magic autocomplete sux!” And if that’s your opinion, that cool, and I really don’t want to argue with you about it. But I don’t think it’s the right lesson for me. The right lesson for me is that AI is a weird tool and it’s changing incredibly quickly, and right now I don’t think we have any idea when to use it or how to use it. Three months ago, I didn’t see much promise at all. Then I played with my first agentic system and it changed my mind. Even in the total mess Claude made, I saw how it could work. I can see where the improvements can happen.

I’ve seen so many semi-programmers and even non-programmers build automation tools for themselves to make their lives easier. That’s real stuff. I want that for them. I may never write a shell script by hand again. AI is just too good at it and the stakes are so low. AI coding will matter and I think in the end, it’ll be a lot like high-level languages. They made more things possible and so we needed more programmers.

But today, maybe “I don’t need to read the docs; I have an AI” may not work out as smoothly as you’re hoping.

-

Why I struggle to use actors

Following up on some discussion about why I keep finding myself using Mutex (née OSAllocatedUnfairLock) rather than actors. This is kind of slapped together; eventually I may try to write up a proper blog post, but I wanted to capture it.

Each of these is a runnable file using

swift ..., with embedded commentary.// Actor to manage deferring execution on a bunch of things until requested // Seems a very nice use for an actor. public actor Defer { public nonisolated static let shared = Defer() private var defers: [@Sendable () -> Void] = [] public func addDefer(_ f: @escaping @Sendable () -> Void) { defers.append(f) } public func execute() { for f in defers { f() } defers.removeAll() } } // First attempt to use it is not surprisingly wrong. The call to `addDefer` is async because actor. public final class DeferUser: Sendable { init() { // Call to actor-isolated instance method 'addDefer' in a synchronous nonisolated context Defer.shared.addDefer { print("Cleanup") } } }OK, this makes sense. But making

DeferUser.initasync puts a lot of restrictions on callers of it. It means there can’t be asharedinstance. It can’t be deterministically constructed in AppDelegate or SwiftUI if anything relies on it. And there’s nothing inherentlyasyncabout constructing it. It’s leaking an implementation detail. “Just make everything async” is not where we should be going in Swift (and is not IMO where we intend to go).

Try 2. Maybe

addDefercould benonisolated:public actor Defer { public nonisolated static let shared = Defer() public private(set) var defers: [@Sendable () -> Void] = [] private func append(_ f: @escaping @Sendable () -> Void) { defers.append(f) } public nonisolated func addDefer(_ f: @escaping @Sendable () -> Void) { Task { await append(f) } } public func execute() { for f in defers { f() } defers.removeAll() } } // Sure. But now there's a surprising race condition: func run() async { Defer.shared.addDefer { print("Cleanup") } // print(await Defer.shared.defers.count) // Uncomment the next line to make this "work" await Defer.shared.execute() } await run()There is no promise that “Cleanup” will be printed until at least

ClosureIsolationis available.Taskwould also need to be updated I believe. This is a serious footgun IMO, and unlikely to be fixed for some time. (For more, see previous Mastodon discussion.)In my experiments, this will never print “Cleanup” unless you “pump the runloop” (to mix an old-school metaphor) by tickling some other async property.

The problem is worse if you move the Task into

DeferUser.initpublic actor Defer { public nonisolated static let shared = Defer() public private(set) var defers: [@Sendable () -> Void] = [] public func addDefer(_ f: @escaping @Sendable () -> Void) { defers.append(f) } public func execute() { for f in defers { f() } defers.removeAll() } } public final class DeferUser: Sendable { init() { // Even with future-Swift, I doubt folks will realize this needs to be put on the Defer context. Task { await Defer.shared.addDefer { print("Cleanup") } } } } // I expect many people will reach for this version, and it's even more unsafe IMO than the last one. func run() async { _ = DeferUser() // print(await Defer.shared.defers.count) // Uncomment the next line to make this "work" await Defer.shared.execute() } await run()This one IMO is worse than the last one because even with “future Swift,” I doubt folks will figure out how to get this Task onto the right context to remove the race condition.

So even with future Swift, I have to think really hard about this.

OR….

I can use a mutex and it all works in a way that I can reason about.

import os public final class Defer: Sendable { public static let shared = Defer() private let defers = OSAllocatedUnfairLock<[@Sendable () -> Void]>(initialState: []) public func addDefer(_ f: @escaping @Sendable () -> Void) { defers.withLock { $0.append(f) } } // If I want this to be async, it can be by putting the `for` loop in a Task. // Or it can be synchronous. The choice is up to me rather than being forced by the actor. public func execute() { // Yes, this requires some special care to do correctly. But `withLock` points a big arrow to // the piece of code I need to think hard about. let defers = defers.withLock { defers in defer { defers.removeAll() } return defers } for f in defers { f() } } } public final class DeferUser: Sendable { init() { Defer.shared.addDefer { print("Cleanup") } } } // And now it should "just work." func run() async { _ = DeferUser() Defer.shared.execute() } await run() -

Dropped messages in for-await

Swift concurrency has a feature called

for-awaitthat can iterate over an AsyncSequence. Combine has a.valuesproperty that can turn Publishers into an AsyncSequence. This feels like a perfect match! But it is surprisingly subtle and makes it very easy to drop messages if you’re not careful.Consider the following example (full code is at the end).

// A NotificationCenter publisher that emits Int as the object. // (Yes, this is an abuse of `object`. Hush. I'm making the example simpler.) let values = nc.publisher(for: name) .compactMap { $0.object as? Int } .values // Loop over the notifications... right? for await value in values { // At this point, nothing is "subscribed" to values, so messages will be dropped until the next loop begins. // ... Process notification ... }This feels right, but it’s subtly broken and will drop notifications. AsyncPublisher provides no buffer. If nothing is subscribed, then items will be dropped. This makes sense. Imagine if

.valuesdid store all of the elements published until they were consumed. Then if I failed to actually consume it, it would leak memory. (We can argue about the precise meaning of “leak” here, but still, grow memory without bound.)Just creating an AsyncPublisher shouldn’t do that. Nothing else works like that in Combine or in Swift Concurrency. An AsyncStream is a value. You should be able to stick it in a variable to use later without leaking memory.(Ma’moun has made me rethink this. It’s true that this is how it works, but I’m now torn a bit more on whether it should.)Similarly, the fact that

for-awaitdoesn’t create a subscription makes sense. In what way would it do that? That’s not how AsyncSequence works. Its job is to callmakeAsyncIterator()and then repeatedly callnext(). It doesn’t know about buffering or Subscriber or any of that. And saymakeAsyncIterator()could take buffering parameters. Where would they go in thefor-awaitsyntax?The answer to all of this is that you need a buffer, and it’s your job to configure it. If you want an “infinite” buffer (which is what people usually think they want), then it looks like this:

let values = nc.publisher(for: name) .compactMap { $0.object as? Int } .buffer(size: .max, prefetch: .byRequest, whenFull: .dropOldest) // <---- .valuesAnd IMO this probably is the most sensible way to solve this, even if the syntax is a bit verbose. Obviously we could add a

bufferedValues(...)extension to make it a little prettier….BUT….

Yeah, nobody remembers this, even if they’ve heard about it before.

.valuesis just so easy to reach for. And the bug is a subtle race condition that drops messages. And you can’t easily unit test for it. And the compiler probably can’t warn you about it. And this problem exists in any situation where an AsyncSequence “pushes” values, which is basically every observation pattern, even without Combine.And so I struggle with whether to encourage

for-await. Every time you see it, you need to think pretty hard about what’s going on in this specific case. And unfortunately, that’s kind of true of AsyncSequence generally. I’m not sure what to think about this yet. Most of my bigger projects use Combine for these kinds of things currently, and it “just works” including unsubscribing automatically when the AnyCancellable is deinited (another thing that’s easy to mess up withfor-await). I just don’t know yet.ADDENDUM

I strangely forgot to also write about

NotificationCenter.notifications(named:), which goes directly from NotificationCenter to AsyncSequence. It’s a good example of the subtlety. It has the same dropped-messages issue:// Also drops messages if they come too quickly, but not as many as an unbuffered `.values`. let values = nc.notifications(named: name) .compactMap { $0.object as? Int } for await value in values { ... }Unlike the Combine version, I don’t know how to fix this one. (Maybe this should be considered a Foundation bug? But maybe it’s just “as designed?”) After experimenting a bit, I believe the buffering policy is

.bufferingNewest(8). If more than 8 notifications come in during your processing loop, you’ll miss some. Should you send notifications that fast? Maybe not? I don’t know. But the bugs are definitely subtle if you do.Here’s the full code to play with:

@MainActor struct ContentView: View { let model = Model() var body: some View { Button("Send") { model.sendMessage() } .onAppear { model.startListening() } } } @MainActor class Model { var lastSent = 0 var lastReceived = 0 let nc = NotificationCenter.default let name = Notification.Name("MESSAGE") var listenTask: Task<Void, Error>? func sendMessage() { lastSent += 1 nc.post(name: name, object: lastSent) } // Set up an infinite for-await loop, listening to notifications until canceled. func startListening() { listenTask?.cancel() listenTask = Task { var lastReceived = 0 let values = nc.publisher(for: name).values .compactMap { $0.object as? Int } for await value in values { // At this point, nothing is "subscribed" to values, so messages will be dropped. let miss = value == lastReceived + 1 ? "" : " (MISS)" print("Received: \(value)\(miss)") lastReceived = value // Sleep to make it easier to see dropped messages. try await Task.sleep(for: .milliseconds(500)) } } } deinit { listenTask?.cancel() } } -

Externalizing properties to manage retains

I have a very simple type in my system. It’s just an immutable struct:

public struct AssetClass: Sendable, Equatable, Hashable, Comparable { public let name: String public let commodities: [String] public let returns: Rates public static func < (lhs: AssetClass, rhs: AssetClass) -> Bool { lhs.name < rhs.name } public static func == (lhs: AssetClass, rhs: AssetClass) -> Bool { lhs.name == rhs.name } public func hash(into hasher: inout Hasher) { hasher.combine(name) } }It has a unique name, a short array of symbols that are part of this asset class, and a couple of doubles that define the expected returns. My system uses this type a lot. In particular, it’s used in the rebalancing stage of monte carlo testing portfolios. There are only 5 values of this type in the system, but they are used tens of millions of times across 8 threads.

They’re value types. They’re immutable value types. So no problem. Except…

…

???

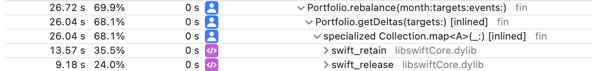

Yes, across all threads,

swift_retainandswift_releasein this one function represent over half of my run time (wall clock, that’s about 12 seconds; the times here count all threads).But… structs. It’s all structs. Why? What?

Most Swift Collections (Array, String, Dictionary, etc.) in most cases store their contents on the heap in a reference-counted class. This is a key part of copy-on-write optimizations, and it’s usually a very good thing. But it means that copying the value, which happens many times when it’s passed to a function or captured into a closure, requires a retain and release. And those have to be atomic, which means there is a lock. And since this value is read on 8 threads all doing basically the same thing, there is a lot of contention on that lock, and the threads spend a lot of time waiting on each other just for reference counting.

In this case, none of the String values are a problem. Both the name and all the commodity symbols are short enough to fit in a SmallString (also lovingly called “smol” in the code). Those are inlined and don’t require heap storage.

But that

commoditiesArray. It’s small. The largest has 5 elements, and the strings are all 5 bytes or less. But it’s on the heap, and that means reference counting. And it isn’t actually used anywhere in the rebalancing code. It’s only used when loading data. So what to do about it?How about moving the data out of the struct and into a global map?

public struct AssetClass: Sendable, Equatable, Hashable, Comparable { // Global atomic map to hold commodity values, mapped to asset class names // All access to `commodities` is now done through .withLock private static var commodityMap = OSAllocatedUnfairLock<[String: [String]]>(initialState: [:]) public init(name: String, commodities: [String], returns: Rates) { precondition(Self.commodityMap.withLock { $0[name] == nil }, "AssetClass names must be unique: \(name)") self.name = name Self.commodityMap.withLock { $0[name] = commodities } self.returns = returns } public let name: String public var commodities: [String] { Self.commodityMap.withLock { $0[name, default: []] } } public let returns: Rates // ...And just like that, 30% performance improvement. Went from 12s to 8s.

This is a sometimes food. Do not go turning your structs inside out because Rob said it was faster. Measure, measure, measure. Fix your algorithms first. This slows down access to

commodities, so if that were used a lot, this would be worse. And it relies on AssetClass being immutable. If there are setters, then you can get shared mutable state when you don’t expect it.But…for this problem. Wow.

There’s more to do. Even after making this change, retain and release in this one function are still over 50% of all my runtime (it’s just a smaller runtime). But at least I have another tool for attacking it.

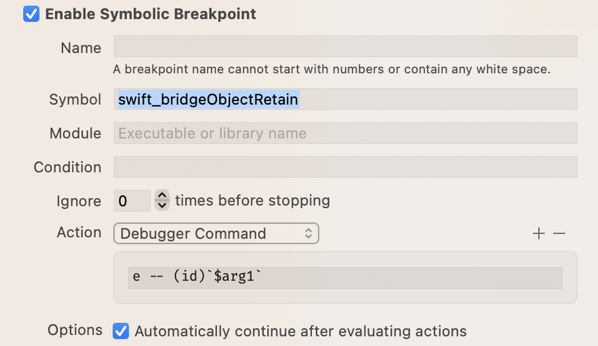

Oh, and how did I know what kinds of objects were being retained? Try this as a starting point:

Be warned: this is a lot of output and very slow and the output is going to tell you about low-level types that may not have obvious correlation to your types, but it’s a starting point.

OK, back to Instruments. I should definitely be able to get this under 5 seconds.

-

A short anecdote on getting answers on the modern internet

This post is way off topic, and a bit in the weeds, and maybe even a bit silly, which is why I’m throwing it here rather than getting back to making my proper blog work. I really will do that, but… ok, not today.

I’ve been trying to learn some abstract algebra. I never studied it in school, and sometimes I bump into people describing operations in “group-speak” (throwing around words like “abelian” and “symmetric group”), and I’d like to try to keep up with what they’re saying even if I probably will never do an actual proof in anger.

Anyway, I got myself confused about the meaning of “order” when talking about groups vs elements. It’s the kind of dumb “how could you not know this at this point in the course?” question that you’re embarrassed to ask (and so I blog about it because I have an under-developed sense of shame). So… let’s ask some LLMs! And also search engines. And how would I figure this out?

Good news: it took longer to write this up than to fix my misunderstanding. OK news: nothing lied to me (well, much). Bad news: well, we’ll get to that as it comes up.

Here’s the exact text I stuck into a bunch of things:

Is the order of a group the lcm of the order of its elements?

To be clear to anyone who knows abstract algebra: I was confused and thought this might be the definition of order. I was misunderstanding the point of Lagrange’s theorem. To anyone who doesn’t know abstract algebra, the correct answer is just “the number of elements in the group.” It really is that simple, and I was making it really over-complicated.

Kagi

Kagi has become my go-to search engine. I like the model of “I pay you, and you provide a service, that’s it, that’s the whole business model.” It gave me several links that weren’t particularly useful (basically ones that prove Lagrange’s theorem, which assume you already know the answer to my question).

But the third link was to a Saylor Academy text book, which answered my question:

In group theory, a branch of mathematics, the term order is used in two closely-related senses:

• The order of a group is its cardinality, i.e., the number of its elements.

• …

OK, that’s cleared up.

Am I done? Of course not! I had to dig through three or four links, most of which confused me more, before I finally found the link to an open-source textbook, where I could go double-check the definition. The internet should be better than that!

Other search engines

To quickly summarize other search engines (because you’re all familiar with those, and of course the point is to get to LLMs):

Duck Duck Go – (OK) Second link was Wikipedia, which gives the answer in the first sentence. For completeness, searching for the same question on Wikipedia directly also gets you right to the page.

Google – (Bad) The Q&A section gives several links to different questions on Stack Exchange, Quora, and Studysmarter.us (?). Importantly, the second answer Google highlights is excerpted as: “Yes, in fact, the order of an element in a finite group…” (The “…” is in the Google excerpt.) You have to carefully notice that this is the answer to a different question. If you read quickly, you could easily walk away thinking “yes” is the answer. This is a big problem with search engine excerpting IMO. If you scroll down past the Q&A section, the first (unrelated) math.stackexchange answer, the “People also ask” section, and then two more links, you will finally come to the Wikipedia article that does answer the question and is where I should have started anyway.

Bing – (Good!) The highlighted answer links to Math.SE, and is extremely useful. It provides this directly on the search page:

No. For instance if G = Z2 ⊕ Z2, then | G | = 4, but the least common multiple of the order of it’s group elements is 2.

Bing added the bold on “No.” This is the only search that directly answered my question, with citation, in one step. The Math.SE link is a bit funny, though. Five years ago, someone had almost exactly the same question as I did. It was answered in a couple of useful ways, had an accepted answer, and then was closed as off-topic. (!?!?!) This question does not show up in most web searches (other than Bing) and does not show up (at least not prominently) when I put my search directly into math.se.

Bing definitely won this one. Also, of course, just going to Wikipedia.

The LLMs - Bing

OK, but we all know what this was really about. How do the LLMs handle this kind of question? Well, let’s start with Bing, since it answered the question so well through search.

Me: is the order of a group the lcm of the order of its elements?

Bing: No, the order of a group is not necessarily the least common multiple of the order of its elements. For instance, if G = Z2 ⊕ Z2, then |G| = 4, but the least common multiple of the order of its group elements is 2.

Precisely the same answer as in the “quick answer” from search, except this time it’s plagiarism. It is word-for-word the answer from Aweygan on math.se, but gives no link, citation, or even hint that this isn’t original content.

Maybe I could get the citation by asking?

Me: What’s the source of your answer?

Bing: My answer is based on my internal knowledge and information. I am programmed to provide accurate and reliable information to the best of my ability.

O_o

Yeah.

ChatGPT

And of course I must check ChatGPT:

No, the order of a group is not necessarily the least common multiple (lcm) of the orders of its elements.

The order of a group is the number of elements in the group. On the other hand, the order of an element in a group is the smallest positive integer n such that the element raised to the nth power is the identity element of the group.

Hey, that’s actually really good. Just the right about of extra information. I’d like citations of course, but just being right about basic facts without plagiarism is pretty good (after all, the math.SE answer doesn’t provide citations, either). I like this. This is good.

And it goes on:

It is true that the order of an element divides the order of the group, by Lagrange’s theorem. However, the lcm of the orders of the elements may be larger than the order of the group. For example, consider the cyclic group of order 6 generated by a. The group contains elements of orders 1, 2, 3, and 6. The lcm of these orders is 6, but the order of the group itself is only 6.

Therefore, the order of a group cannot be expressed in terms of the lcm of the orders of its elements, in general.

Ah, a counter-example where the lcm is larger than the order. Very helpful. In the case where the order is six and the lcm is…six?

Oh ChatGPT. If only you’d quit while you were ahead.

-

A Reminder About NSRange and String

The NSRange for a full string is

NSRange(location: 0, length: (text as NSString).length). You cannot usetext.countfor this. NSRanges on strings are based on UTF-16 code points, not Swift Characters. A character like 👩👩👧👧 has a.countof 1, but a.lengthof 11. ObjC-based NSRange APIs (even ones ported to Swift) need the latter. Swift interfaces (which use Range rather than NSRange) need the former.Whenever possible, avoid NSRange. But when it’s needed, make sure to use it correctly. It’s very easy to make mistakes that break on non-ASCII, and especially on emoji.

(Side note:

(text as NSString).lengthis the same astext.utf16.count. I don’t have a strong opinion on which to use. In both cases, future developers may get confused and try to “fix” the code back totext.count.(text as NSString)is nice because it ties it back to this being an NSString API and is easier IMO to see that something special is happening here, but it also looks like an auto-conversion barnacle..utf16.countis shorter and calls out what is really happening, but I almost always type.utf8.countout of muscle-memory and it’s hard to see the mistake in code-review. So do what you want. A comment is probably warranted in either case. I hate NSRange in Swift…) -

Big-O matters, but it's often memory that's killing your performance.

We spend so much time drilling algorithmic complexity. Big-O and all that. But performance is so often about contention and memory, especially when working in parallel.

I was just working on a program that does Monte Carlo simulation. That means running the same algorithm over the same data thousands of times, with some amount of injected randomness. My single-threaded approach was taking 40 seconds, and I wanted to make it faster. Make is parallel!

I tried all kinds of scaling factors, and unsurprisingly the best was 8-way on a 10-core system. It got me down to…50 seconds?!?!? Yes, slower. Slower? Yes. Time to pull out Instruments.

My first mistake was trying to make it parallel before I pulled out Instruments. Always start by profiling. Do not make systems parallel before you’ve optimized them serially. Sure enough, the biggest bottleneck was random number generation. I’d already switched from the very slow default PRNG to the faster GKLinearCongruentialRandomSource. The default is wisely secure, but slow. The GameKit PRNGs are much faster, but more predictible. For Monte Carlo simulation, security is not a concern, so a faster PRNG is preferable. But it was still too slow.

Why? Locking. GKLinearCongruentialRandomSource has internal mutable state, and is also thread-safe. That combination means locks. And locks take time, especially in my system that generates tens of millions of random values, so there is a lot of contention.

Solution: make the PRNG a parameter and pass it in. That way each parallel task gets its own PRNG and there’s no contention. At the same time, I switched to a hand-written version of xoshiro256+ which is specifically designed for generating random floating-point numbers. Hand-writing my own meant that I know what it does and can manage locking. (I actually used a struct that’s passed

inoutrather than locking. I may test out a class + OSAllocatedUnfairLock to see which is faster.)Anyway, that got it down to 30s (with 8-way parallelism), but still far too slow. Using 8 cores to save 25% is not much of a win. More Instruments. Huge amounts of time were spent in retain/release. Since there are no classes in this program, that might surprise you, but copy-on-write is implemented with internal classes, and that means ARC, and ARC means locks, and highly contended locks are the enemy of parallelism.

It took a while to track down, but the problem was roughly this:

portfolio.update(using: bigObjectThatIncludesPortfolio)bigObjectincludes some arrays (thus COW and retain/release) and includes the object that is being updated. Everything is a struct, so there’s definitely going to be a copy here as well. I rewroteupdateand all the other methods to take two integer parameters rather than one object parameter and cut my time down to 9 seconds.Total so far from cleaning up memory and locks: >75% improvement.

Heaviest remaining stack trace that I’m digging into now:

swift_allocObject. It’s always memory… -

Pull Requests are a story

I’ve been thinking a lot about how to make PRs work better for teams, and a lot of my thinking has gone into how to show more compassion for the reviewer. Tell them your story. What was the problem? What did you do to improve it? How do you know it is a working solution (what testing did you do)? Why do you believe it is the right solution? Why this way rather than all the other ways it might be solved?

Code does not speak for itself. Even the most clear and readable code does not explain why it is necessary, why you are writing it now. Code does not explain why it is sufficient. The problem it solves lives outside the program. The constraints that shape a program can only be inferred. They are not in the code itself. When we want others to review our coding choices, we have to explain with words. We have to tell our reviewers a story.

And that brings me to the most important writing advice I’ve ever been taught. If you want to write well, you must read what you wrote. There’s an old saying that writing is rewriting, but hidden in that adage is that rewriting is first re-reading.

The same is true of PRs and code review. Before you ask another person to review your code, review it yourself. See it on the screen the same way they will. Notice that commented-out block and the accidental whitespace change. Is refactoring obscuring logic changes? If you were the reviewer, what kinds of testing (manual or automated; this isn’t a post about unit testing) would make you comfortable with this change?

Maybe you need to do the hard work of reorganizing your commits (and checking that your new code is precisely the same as your old code!). But maybe you just need to explain things a bit more in the PR description. Maybe a code-walkthrough is needed. Or maybe it really is an obvious change, and your reviewer will understand at once. There’s no need to over-do it. Let compassion and empathy lead you, not dogmatic rules.

And remember that compassion and empathy, that feeling of being in another person’s place, when it’s time for you to be the reviewer.

-

Solving "Required kernel recording resources are in use by another document" in Instruments

So you have a Swift Package Manager project, without an xcodeproj, and you launch Instruments, and try to profile something (maybe Allocations), and you receive the message “Required kernel recording resources are in use by another document.” But of course you don’t have any other documents open in Instruments and you’re at a loss, so you’ve come here. Welcome.

(Everything here is from my own exploration and research over a few hours. It’s possible there are errors in my understanding of what’s going on, or there’s a better solution, in which case I’d love to hear from you so I can improve this post.)

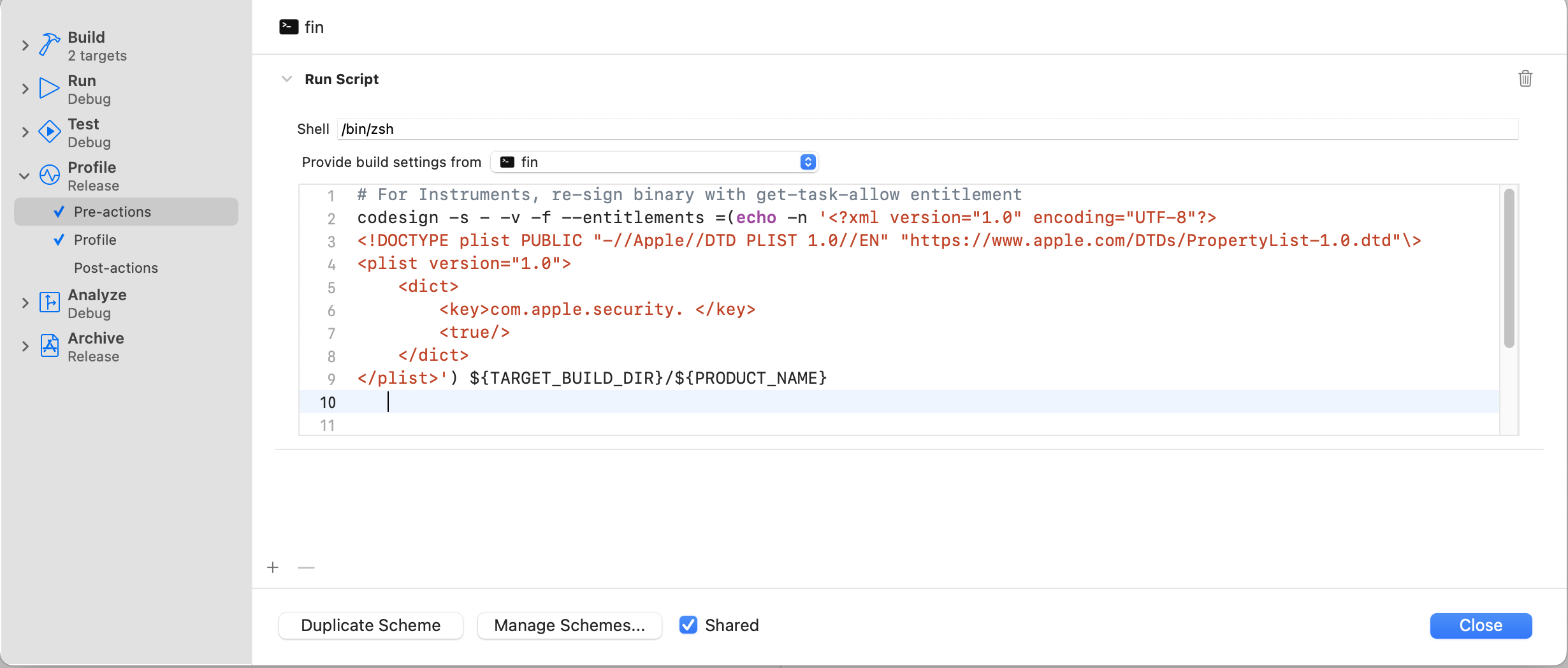

First, this error message has nothing to do with the actual error. The real error is that your binary doesn’t have the

get-task-allowentitlement. I believe this is because it’s a release build, and SPM doesn’t distinguish between “release” and “profiling.” So you need to re-sign the binary.Edit your scheme (Cmd-Shift-,) and open the Profile>Pre-actions section. Add the following to re-sign prior to launching Instruments. Set your shell to

/bin/zsh(this won’t work with bash).

# For Instruments, re-sign binary with get-task-allow entitlement codesign -s - -v -f --entitlements =(echo -n '<?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "https://www.apple.com/DTDs/PropertyList-1.0.dtd"\> <plist version="1.0"> <dict> <key>com.apple.security.get-task-allow</key> <true/> </dict> </plist>') ${TARGET_BUILD_DIR}/${PRODUCT_NAME}The funny

=(...)syntax is a special zsh process subtitution that creates a temporary file containing the output of the command, and then uses that temporary file as the parameter. Note the-non the echo. It’s required that there be no trailing newline here.This script will be stored in

.swiftpm/xcode/xcshareddata/xcschemes/<schemename>.xcscheme.You might think you could just have a plist in your source directory and refer to it in this script, but pre-action scripts don’t know where the source code is. They don’t get SRCROOT.

Also beware that if there’s a problem in your pre-action script, you’ll get no information about it, and it won’t stop the build even if it fails. The output will be in Console.app, but other than that, it’s very silent.

So this is a mess of a solution, but I expect it to be pretty robust. It only applies to the Profile action, so it shouldn’t cause any problems with your production builds.

You can also switch over to using an xcodeproj, but… seriously? Who would do that?

-

Time for something new

So, all my tweets about C++ and Rust brings me to a minor announcement. After nearly 6 years with Jaybird, learning so much about Bluetooth on iPhones and custom firmware and audio, it’s time for something new. Luckily, Logitech is large enough that I can change jobs without changing my health insurance.

In October I’m moving to the Logitech Software Engineering team. My exact projects are still up in the air, but I’ll likely work mostly on desktop products and interfacing with peripherals, particularly for gaming. Lots of C++ and thinking about Rust. Some Objective-C. Some Electron. Maybe a little Swift, but probably not right away.

I love Swift, but it’s become almost everything I do for the last few years, and it’s gotten me into a silo where it’s all I really know well. I’m really looking forward to branching out again.

-

OK, I just fell in 😍 with stdlib’s

Result(catching:).extension Request where Response: Decodable { func handle(response: Result<Data, Error>, completion: (Result<Response, Error>) -> Void) { completion(Result { try JSONDecoder().decode(Response.self, from: response.get()) }) } } -

Reminder: If you’re running into limitations with default parameters (for example, the value can’t be computed, they can’t specialize a generic, defaults don’t exist in literals), you can always replace a default with an explicit overload.

func f(x: Int = 0) {}is the same as

func f(x: Int) {} func f() { f(0) }

subscribe via RSS